Trusted AI

Welcome to the Trusted AI for Universal-perception (TAU) Lab at the University of Shanghai for Science and Technology (USST)! Our research aims to improve the explainability, fairness, privacy, and efficiency of AI models. We develop machine learning algorithms and systems based on these principles and translate them into real-world applications such as robotics, healthcare, and AI for science.

- [TOP-01/2025] We got a paper accepted by ICLR 2025 (Oral, Top 1.8%)

- [TOP-09/2023] We got a paper accepted by International Journal of Computer Vision (IJCV, IF=19.6)

- [01/2026] We got two papers accepted by ICLR 2026

- [12/2025] We got a paper accepted by Pattern Recognition (PR, IF=7.6)

- [11/2025] We got a paper accepted by IEEE Transactions on Multimedia (IEEE-TMM, IF=9.7)

- [11/2025] We got a paper accepted by AAAI 2026 (Oral, Top 4.3%)

- [09/2025] We got a paper accepted by NeurIPS 2025

- [06/2025] We got a paper accepted by CAAI Transactions on Intelligence Technology (CAAI-TIT, IF=7.3)

- [05/2025] Wenxin Su was admitted to the PhD program at the European Molecular Biology Laboratory (EMBL). Congratulations!

- [02/2025] We got two papers accepted by CVPR 2025

- [12/2024] We got a paper accepted by AAAI 2025

- [11/2024] We got a paper accepted by Medical Image Analysis (MedIA, IF=10.7)

- [11/2024] We got a paper accepted by IEEE Transactions on Circuits and Systems for Video Technology (IEEE-TCSVT, IF=5.2)

- [10/2024] We got a paper accepted by IEEE Transactions on Circuits and Systems I: Regular Papers (IEEE-TCSI, IF=5.2)

- [10/2024] We got a paper accepted by Electronics (IF=2.6)

- [10/2024] Jianxin Gao received a postgraduate recommendation to Peking Union Medical College, Tsinghua University. Congratulations!

- [09/2024] We got a paper accepted by NeurIPS 2024

- [08/2024] We got a research project (General Program) funded by NSFC

- [08/2024] We got a paper accepted by IEEE Transactions on Intelligent Transportation Systems (IEEE-TITS, IF=7.9)

- [07/2024] We got a paper accepted by ACM-MM 2024

- [03/2024] Wenxin Su got the First Prize Scholarship at USST (Top 5%). Congratulations!

- [02/2024] We got a paper accepted by CVPR 2024

- [01/2024] We got a paper accepted by Expert Systems with Applications (ESWA, IF=8.5)

- [11/2023] We got a research project (General Program) funded by SAST funding

- [10/2023] We got a paper accepted by Robotic Intelligence and Automation (RIA, IF=2.373)

- [09/2023] Wenxin Su got the National Scholarship for Postgraduate Excellence 2023 (Top 1%). Congratulations!

- [09/2023] We got a paper accepted by IEEE Transactions on Multimedia (IEEE-TMM, IF=7.3)

- [09/2023] We got a paper accepted by Pattern Recognition (PR, IF=8.0)

- [05/2023] We got a paper accepted by ICRA 2023

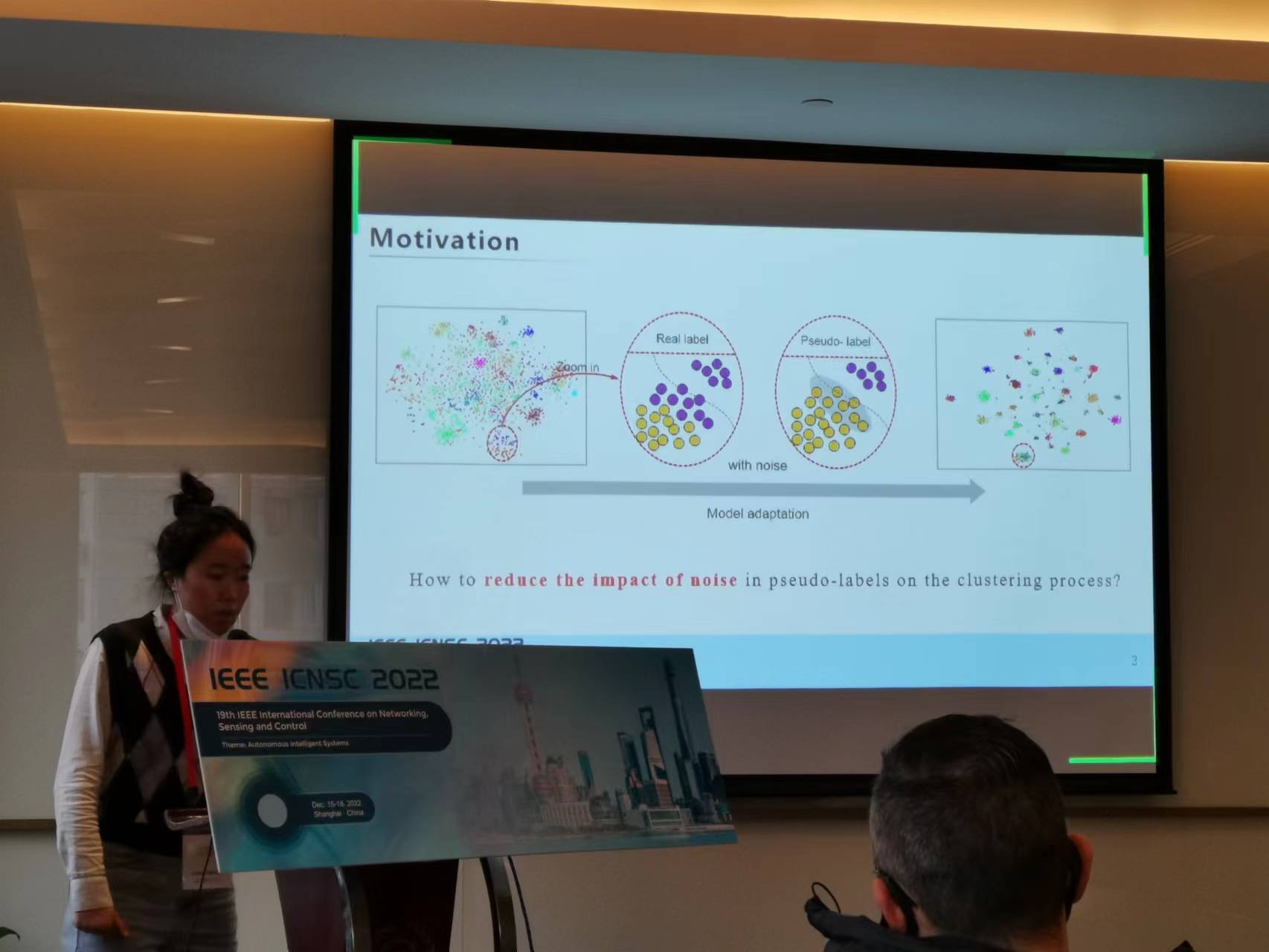

- [12/2022] We got a paper accepted by ICNSC 2022, and the paper was nominated for Best Paper.

- [09/2022] Yan Zou got the National Scholarship for Postgraduate Excellence 2022 (Top 1%). Congratulations!

- [08/2022] We got a paper accepted by Neural Networks (NN, IF=9.657)

- [08/2022] The TAU Lab website is officially running.

Note: * indicates the corresponding author; bold font indicates a member of the TAU Lab.

S. Tang, P. Gong, K. Li, K. Guo, B. Wang, M. Ye, J. Zhang, X. Zhu

International Conference on Learning Representations (ICLR)

R. Fang, J. Zhao, S. Wang, R. Pu, B. Li, J. Cai, Z. Li, Z. Jing, J. Zhu, S. Tang, C. Ling, B. Wang

International Conference on Learning Representations (ICLR)

L. Chen, Y. Bai, Y. Hu, Q. Wang, X. Qi

Pattern Recognition (PR)

Z. Zou, M. Ye, L. Ji, L. Zhou, S. Tang, Y. Gan, S. Li

IEEE Transactions on Multimedia (TMM)

R. Fang, S. Wang, R. Pu, Q. Zeng, H. Zheng, Z. Wang, J. Cai, Z. Mei, S. Tang, C. Ling, B. Wang

Annual AAAI Conference on Artificial Intelligence (AAAI) 2026

N. Li, M. Ye, L. Zhou, S. Li, S. Tang, L. Ji, C. Zhu

Annual Conference on Neural Information Processing Systems (NeurIPS) 2025

S. Tang, W. Su, Y. Yang, L. Chen and M. Ye

CAAI Transactions on Intelligence Technology (CTIT) (IF = 7.3)

W. Su, S. Tang*, X. Liu, X. Yi, M. Ye, C. Zu, J. Li, X. Zhu

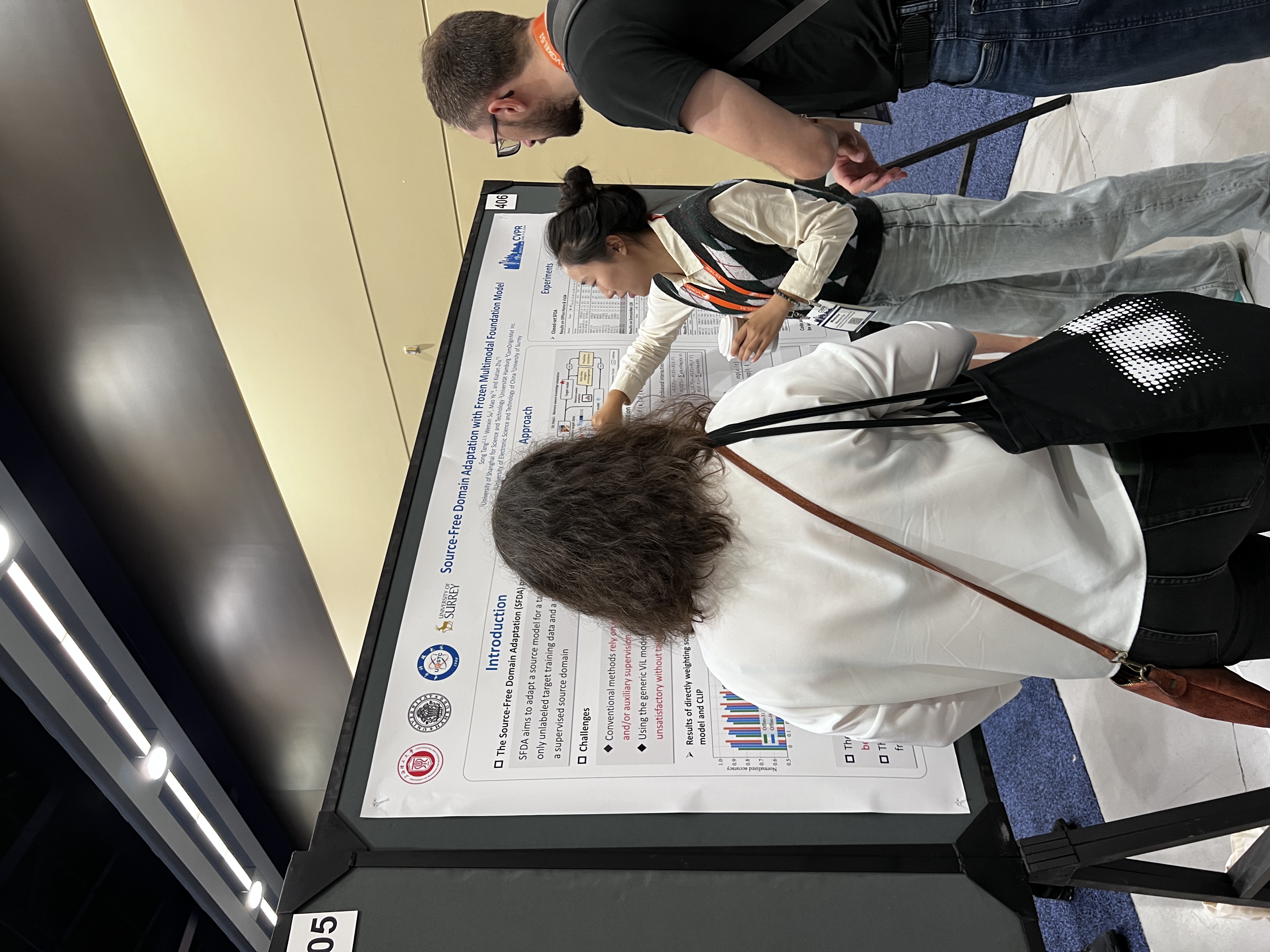

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2025

T. Li, M. Ye, T. Wu, N. Li, S. Li, S. Tang, L. Ji

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2025

Song Tang, Wenxin Su, Y. Gan, M. Ye, J. Zhang, X. Zhu

International Conference on Learning Representations (ICLR) 2025

N. Li, M. Ye, L. Zhou, Song Tang, Y. Gan, Z. Liang, X. Zhu

Annual AAAI Conference on Artificial Intelligence (AAAI) 2025

S. Tang, S. Yan, X. Qi, J. Gao, M. Ye, J. Zhang, X. Zhu

Medical Image Analysis

Y. Gan, C. Wu, D. Ouyang, S. Tang, M. Ye and T. Xiang

IEEE Transactions on Circuits and Systems for Video Technology

J. Luo, L. Chen*, K. Shi, S. Tang, R. Zhang, J. Cheng and J.H. Park

IEEE Transactions on Circuits and Systems I: Regular Papers

L. Chen, M. Yu, G. Kou and J. Luo*

Electronics

S. Li, M. Ye, H. Li, N. Li, S. Xiao, S. Tang and Xiatian Zhu

Conference on Neural Information Processing Systems (NeurIPS) 2024

S. Li, M. Ye, L. Ji, S. Tang, Yan Gan and Xiatian Zhu

IEEE Transactions on Intelligent Transportation Systems

S. Xiao, M. Ye, Q. He, S. Li, S. Tang and X. Zhu

ACM Multimedia (ACM-MM), 2024.

S. Tang, W. Su, M. Ye and X. Zhu

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024.

J. Liu, J. Cui, M. Ye, X. Zhu and S. Tang

Expert Systems with Applications (ESWA), Jan. 2024. (IF = 8.5)

H. Sun, S. Tang*, X. Qi, Z. Ma and J. Gao

Robotic Intelligence and Automation (RIA), Oct. 2023. (IF = 2.373)

S. Tang, A. Chang, F. Zhang, X. Zhu, M. Ye and C. Zhang

International Journal of Computer Vision (IJCV), Oct. 2023, DOI:10.1007/s11263-023-01892-w. (IF = 19.6)

S. Tang, Y. Shi, Z. Song, M. Ye, C. Zhang and Jianwei Zhang

IEEE Transactions on Multimedia (TMM), Oct. 2023, DOI:10.1109/TMM.2023.3321421. (IF = 7.3)

L. Zhou, N. Li, M. Ye, X. Zhu, S. Tang

Pattern Recognition (PR), Sep. 2023, DOI:10.1016/j.patcog.2023.109974. (IF = 8.0)

J. Mi, S. Tang, Z. Ma, D. Liu and J. Zhang

IEEE International Conference on Robotics and Automation (ICRA) 2023

Wenxin Su (M.S. student)

Shaxu Yan (M.S. student)

Yunxiang Bai (M.S. student)

Chunxiao Zu (M.S. student)

Jiuzheng Yang (M.S. student)

Mingchu Yu (M.S. student, Visiting)

Aliu Shi (M.S. student, Visiting)

Kai Guo (M.S. student)

Peihao Gong (M.S. student)

- Yan Zhou (M.S. graduate, 2023), USST ----> Studying toward his Ph.D. at Harbin Institute of Technology (HIT)

- Yuji Shi (M.S. graduate, 2023), USST ----> Studying toward her Ph.D. at Huazhong University of Science and Technology (HUST)

- Zihao Song (M.S. graduate, 2023), USST ----> Working in the software patent industry in Zhengzhou

- Yan Yang (M.S. graduate, 2023), USST ----> Working in the medical AI industry in Shanghai